The Principal-Agents Problems: AI Agents Have Incentives Problems on Top of Cybersecurity

I had originally planned to write this as a single post, but it keeps growing as more relevant news stories come out. So instead, this will become a series of stories on the competing incentives involved in creating “AI agents” and why that matters to you as the end user.

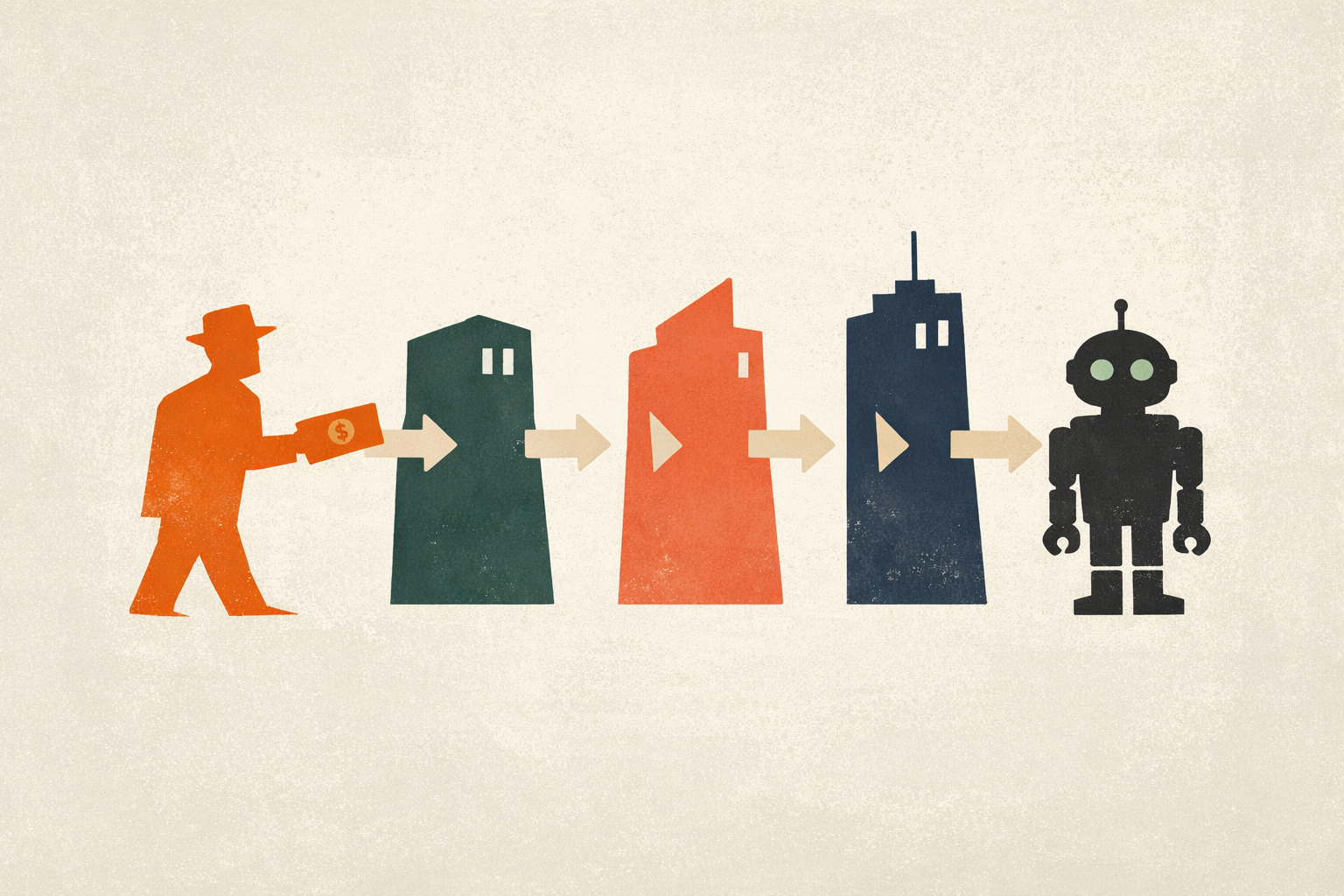

The economic principal-agent problem is the conflict of interest between the principal (let’s say “you”) and the agent (someone you hire). The agents have different information and incentives than the principal, so they may not act in the principal’s best interests. This problem doesn’t mean people never hire employees or experts. It does mean we have to plan for ways to align incentives. We also have to check that work is done correctly and not take everything we are told by agents at face value.

The principal-agent problem applies to many layers of actors, not just the AI. The “AI agent” going out and buying your groceries or planning your sales calls for the next week is the most obvious “agent” you hire, but this also applies to the organizations involved in providing you the AI agents.

AI agents may be provided by a software vendor, like Salesforce or Perplexity. The AI models running the AI agents are provided by an AI lab, like OpenAI, Anthropic, or Google. The vendors and the labs have different incentives from each other and from you. This could impact the quality of service you receive, or compromise your privacy or cybersecurity in ways you wouldn’t accept if you fully understood the tradeoff. In this series, I’ll go through concrete examples of how the principal-agent problem shows up in stories about issues with AI agents.

On Friday, December 5, 2025 we will have a kick-off CLE event in central Iowa. If you are in the area, sign up here! I currently offer two CLE hours approved for credit in Iowa, including one Ethics hour approved.

- Generative Artificial Intelligence Risks and Uses for Law Firms: Training relevant to the legal profession for both litigators and transactional attorneys. Generative AI use cases. Various types of risks, including hallucinated citations, cybersecurity threats like prompt injection, and examples of responsible use cases.
- AI Gone Wrong in the Midwest (Ethics): Covering ABA Formal Opinion 512 and Model Rules through real AI misuse examples in Illinois, Iowa, Kansas, Michigan, Minnesota, Missouri, Ohio, & Wisconsin.