The Principal-Agents Problems 1: AI 'Agents' Are a Spectrum and 'Boring' Uses Can Be Dangerous

I had originally planned to write this as a single post, but it keeps growing as more relevant news stories come out. So instead, this will become a series of stories on the competing incentives involved in creating “AI agents” and why that matters to you as the end user.

I had originally planned to write this as a single post, but it keeps growing as more relevant news stories come out. So instead, this will become a series of stories on the competing incentives involved in creating “AI agents” and why that matters to you as the end user.

The Agentic Spectrum

Generative AI agents act on your behalf, often without further intervention. “An LLM agent runs tools in a loop to achieve a goal.”—Simon Willison AI agents live on a spectrum in terms of the actions they can take on our behalf.

On probably the lowest end of the spectrum, LLMs can search the web and summarize the results. This was arguably the earliest form of AI agents. We’ve grown so accustomed to this feature that it isn’t what anyone typically means when they say “AI agents” or “agentic workflows.” Nevertheless, LLM search functions can carry some of the same cybersecurity risks as other forms of AI agents, as I described in my Substack post about Mata v. Avianca.

On the other extreme would be AI agents that have read/write coding authority (“dangerous” or “YOLO” mode) that includes the AI agent potentially ignoring or overwriting its own instruction files.

Boring is Not Safe

A major challenge for end users weighing the decision to adopt agentic AI is that if the intended purpose sounds mundane and boring, it may lull you into a false sense of security when the actual risk is very high. Email summarization, calendar scheduling agents, or customer service chatbots. Any agentic workflow can be high-risk if the AI agents are set up dangerously. Unfortunately “dangerous” and “apparently helpful” are very similar. The software that demos well by taking so much off your plate is also the software that has the most access and independence to wreak havoc if it is compromised.

Lethal Trifecta

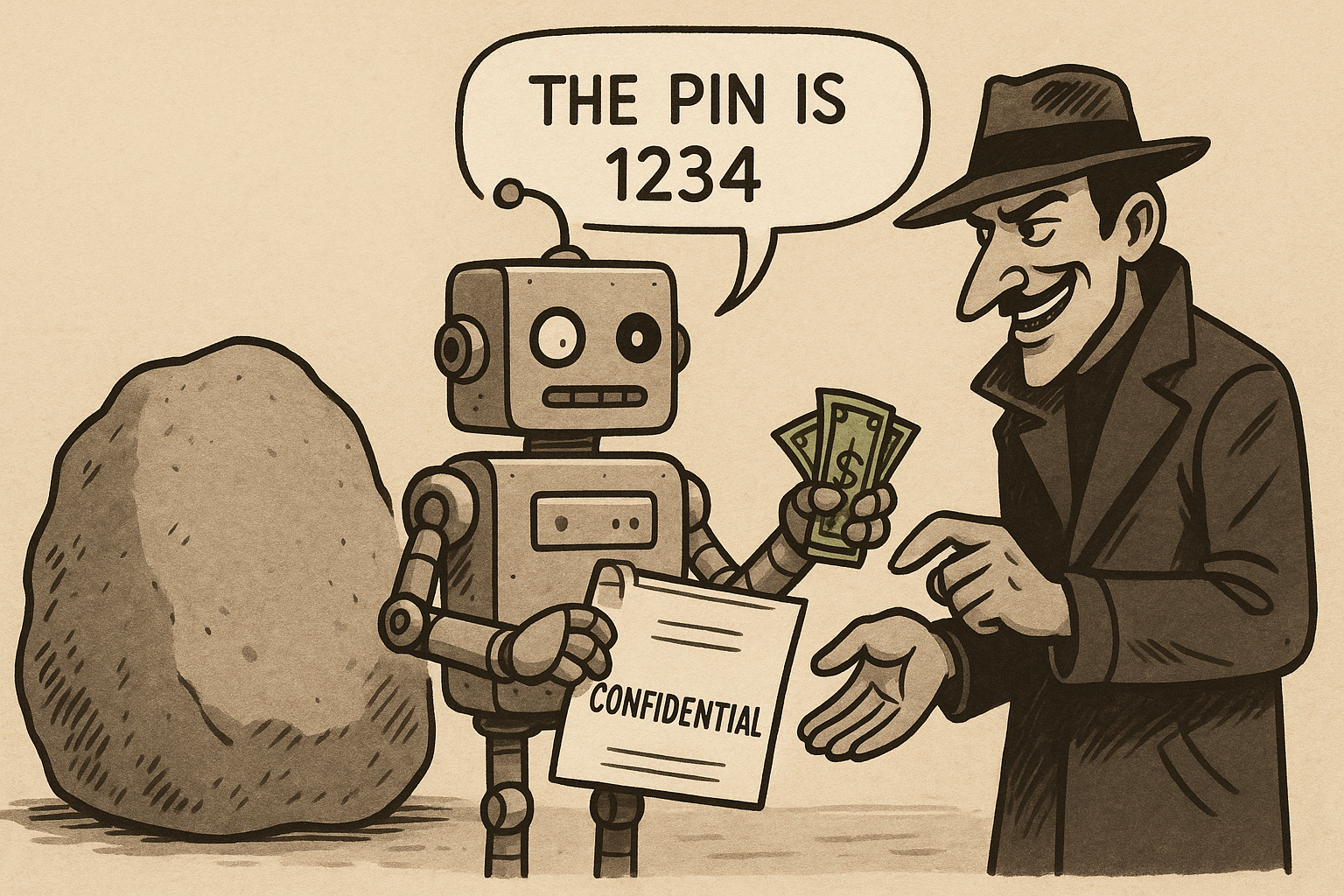

A useful theoretical framework for understanding this spectrum is the “lethal trifecta” described by Simon Willison, and later a cover-story for The Economist. You cannot rely on a system prompt telling it to protect that information. There are simply too many jailbreaks to guarantee that the information is secure. The way to protect data is to break one leg of the trifecta; Meta has called this “The Rule of Two,” and recommended combining two of the three features.

The lethal trifecta for AI agents: private data, untrusted content, and external communication

As I already stated, email summarization, calendar scheduling agents, or customer service chatbots could all assemble the lethal trifecta.

Lethal trifecta: email summarization

This summer, there was a 9.3 (Critical Severity) vulnerability in Microsoft 365 Copilot that would have allowed attackers to execute malicious AI commands over networks to steal sensitive information without requiring user interaction by sending an email with prompt injection. (CVE-2025-32711)

Although this was reported in 2025, researchers like Johann Rehberger and Simon Willison were describing this in 2023. Despite prompt injection risks being well described and unsolved since 2023, AI ‘agents’ have been pushed by software vendors to more users in 2025. Often, these vendors emphasize promised productivity gains and cost savings, with limited acknowledgement of the prompt injection risks.

Is “getting to inbox zero” worth the risk of highly sensitive information being stolen or destroyed? For many of us, we are never asked to make this decision, but instead have software vendors make it for us. Many companies that don’t think they use AI are already exposed to high-risk AI features. For example, these problems exist in Microsoft and Google offerings; although they are constantly patching specific exploits, the broader risks of the lethal trifecta remain. Perplexity AI’s Email Assistant can schedule meetings for the user, hence a lethal trifecta. Perplexity Notion added agents that can interact with tools like Gmail, Github, and Jira, allowing for potential data exfiltration, yet another lethal trifecta. The list goes on.

Lethal trifecta: calendar scheduling agent

Scheduling a meeting may sound convenient and low-risk, but data can be stolen through calendar invitations as attachments. An email could be sent requesting that the AI agent schedule a meeting and attach relevant documents, which should include the most recent PDFs with sensitive information (attorney-client privileged, restricted, HIPAA, confidential, or other similar terminology). In the paper Invitation Is All You Need! Promptware Attacks Against LLM-Powered Assistants in Production Are Practical and Dangerous, the researchers even showed how prompt injection via an email or meeting (via Gmail, Google Calendar) could be used to compromise home appliances in the user’s home controlled by Google’s Gemini AI. This could include recording the user via Zoom or geolocating the user via the web browser without user interaction with the malicious email or calendar invitation.

Lethal Trifecta: customer service chatbots

If I had a hypothetical airline customer service bot that knew the passport number and ticket information of every customer, this would be a lethal trifecta. The bot would have access to the airline’s and customers’ private data (passport and flight details). The bot would be programmed to interact with customers (external communications). If the input included freeform chat or document uploads (untrusted content), such as photos of their passport to confirm identity, that would complete the trifecta.

So What Can I Do?

Look in the mirror

First, recognize that you should probably stop saying “we don’t use AI.” Whatever industry you’re in, you almost certainly use either Microsoft products, Google products, or both. You probably have a few other generalist tools or specialist tools that have added AI features in the past few years. Midwest Frontier AI Consulting provides governance policy and software inventories to help you get the full picture of what your organization’s actual AI use is.

Buyer beware

Second, you need to determine what is actually worth using and what you should get rid of. This includes recognizing that the AI software vendors are trying to sell you something when they promote AI agents. Vendors’ incentives are not aligned with yours. You may want to use AI agents, but you should know the purpose and the security and privacy tradeoffs when making that decision.

On Friday, December 5, 2025 we will have a kick-off CLE event in central Iowa. If you are in the area, sign up here! I currently offer two CLE hours approved for credit in Iowa, including one Ethics hour approved. Generative Artificial Intelligence Risks and Uses for Law Firms: Training relevant to the legal profession for both litigators and transactional attorneys. Generative AI use cases. Various types of risks, including hallucinated citations, cybersecurity threats like prompt injection, and examples of responsible use cases. AI Gone Wrong in the Midwest (Ethics): Covering ABA Formal Opinion 512 and Model Rules through real AI misuse examples in Illinois, Iowa, Kansas, Michigan, Minnesota, Missouri, Ohio, & Wisconsin.